2022 FAQs

General

1. We would like to participate. What do we need to do?

Please fill out the participation intent form to list your institution, your team and the tracks you will participate in. You just need to follow the instructions and submit the form.

2. I am interested only in submitting a paper but not in the Challenge. Can I do that?

Yes. Please make sure to submit your paper by the submission deadline.

3. How large can a team be?

There are no restrictions on team size.

4. What are the rules for downloading the data set?

A participation agreement is made available before the data is shared. You need to accept the agreement and submit your response before you can access the data set.

5. Can I use any available data set to train models in this Challenge?

Teams that wish to be listed on the public leaderboard and to be eligible for the challenge awards are NOT allowed to use any external data for either training or validation. The winning teams and runners-up are required to submit their training and testing code for verification after the challenge submission deadline. However, teams are allowed to use data across different challenge tracks.

6. What are the prizes?

This information is shared in the Awards section.

7. Will we need to submit our code?

Teams need to make their code publicly accessible to be considered for winning (including a complete, reproducible pipeline for model training/creation). This is to ensure that no external data is used for training, that the tasks were performed by algorithms and not humans, and that the work contributes to the community.

8. How will the submissions be evaluated?

The submission formats for each track are detailed on the Data and Evaluation page.

9. Are we allowed to use validation sets in training?

The validation sets are allowed to be used in training.

10. Are we allowed to use test sets in training?

Additional manual annotations on our testing data are strictly prohibited. We also do not encourage the use of testing data in any way during training, with or without labels, because the task is supposed to be evaluated fairly, as in real life, where we don’t have access to testing data at all. Although it is permitted to run algorithms such as clustering to automatically generate pseudo labels on the testing data, when multiple teams have similar performance (within about 1%) we will choose a winning method that does not use such techniques. Finally, please keep in mind that, as in all previous editions of the AI City Challenge, all winning teams and runners-up will be asked to submit their code for verification purposes. Their performance needs to be reproducible using the training/validation/synthetic data only.

11. Are we allowed to use data/pre-trained models from the previous edition(s) of the AI City Challenge?

Data from previous edition(s) of the AI City Challenge are considered external data. These data and pre-trained models should NOT be used for submissions to the public leaderboards. However, re-training these models on the new training sets from the latest edition is allowed.

12. Are we allowed to use other external data/pre-trained models?

The use of any real external data is prohibited. There is NO restriction on synthetic data. Models pre-trained on ImageNet / MSCOCO that are not directly targeted at the challenge tasks can be used, such as classification models (pre-trained ResNet, DenseNet, etc.) and detection models (pre-trained YOLO, Mask R-CNN, etc.). Please confirm with us if you have any questions about the data/models you are using.

Track 1

1. In some scenarios, some misalignment of synchronization is observed even after adding the time offset. Why does it happen?

Note that due to noise in video transmission, which is common in real deployed systems, some frames are skipped within some videos, so they are not perfectly aligned.

2. What is the format of the baseline segmentation results by Mask R-CNN? How can they be decoded?

Each line of the segmentation results corresponds to the output of detection in “train(test)///det/det_mask_rcnn.txt.”

To generate the segmentation results, we adopt the implementation of Mask R-CNN within Detectron: https://github.com/facebookresearch/Detectron

Each segmentation mask is the representation after processing vis_utils.convert_from_cls_format() within detectron/utils/vis.py. It can be visualized/displayed using other functions in the vis_utils.

3. How can we use the file ‘calibration.txt’? It is a matrix from GPS to 2D image pixel location and there are some tools about image-to-world projections in amilan-motchallenge-devkit/utils/camera, but how can we use the code correctly?

The tool we used for calibration is also publicly available here. We mainly rely on the OpenCV libraries for homography operations. For projection from GPS to 2D pixel location, you may simply apply matrix multiplication with our provided homography matrix. For the back projection from 2D pixel location to GPS, first calculate the inverse of the homography matrix (using invert() in OpenCV), and then apply matrix multiplication. The image-to-world projection methods in “amilan-motchallenge-devkit/

In the updated calibration results, the intrinsic parameter matrices and distortion coefficients are provided for fish-eye cameras. Note that though the GPS positions are represented as angular values instead of coordinates on a flat plane, since the longest distance between two cameras is very small (4 km) compared to the perimeter of the earth (40,075 km), they can still be safely viewed as a linear coordinate system.
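The projection described in this answer can be sketched as follows. This is a minimal illustration, not the organizers' calibration tool: the matrix values, the (latitude, longitude) input order, and the helper name `apply_homography` are assumptions made for the example, and `numpy.linalg.inv` stands in for OpenCV's `invert()`.

```python
import numpy as np


def apply_homography(H, points):
    """Map an (N, 2) array of points through a 3x3 homography H."""
    pts = np.asarray(points, dtype=np.float64)
    # Lift to homogeneous coordinates: (a, b) -> (a, b, 1).
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    # Divide by the third component to return to 2D.
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical GPS-to-pixel homography; real values come from calibration.txt.
H_gps_to_pixel = np.array([
    [1000.0, 0.0, -42000.0],
    [0.0, 1000.0, 91000.0],
    [0.001, 0.002, 1.0],
])

# Back projection uses the inverse matrix.
H_pixel_to_gps = np.linalg.inv(H_gps_to_pixel)

gps = np.array([[42.5, -90.7]])  # assumed (latitude, longitude) order
pixel = apply_homography(H_gps_to_pixel, gps)
gps_again = apply_homography(H_pixel_to_gps, pixel)
print(pixel, gps_again)
```

Keeping everything in 64-bit floats matters here, because neighboring GPS positions differ only in the later decimal places.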

4. How are the car IDs used for evaluation? In the training data there are ~200 IDs. But when working on the test set, the tracker may generate arbitrary car IDs. Do they need to be consistent with the ground truths? Is the evaluation based on the IOU of the tracks?

We use the same metrics as MOTChallenge for the evaluation. Please refer to the evaluation tool in the package for more details. The IDs in the submitted results do not need to match the exact IDs in the ground truths. We will use bipartite matching for their comparison, which will be based on IOU of bounding boxes.
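As a rough illustration of why submitted IDs need not equal the ground-truth IDs, the sketch below pairs predicted and ground-truth boxes in a single frame by maximizing the total IoU. It is not the MOTChallenge evaluation tool: the (x, y, w, h) box format, the 0.5 IoU threshold, the function names, and the brute-force search (chosen to avoid dependencies; a Hungarian solver would be used at scale) are all assumptions made for this example.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def match_ids(gt, pred, min_iou=0.5):
    """Return {pred_id: gt_id} for the one-to-one pairing with the highest total IoU.

    gt and pred map arbitrary IDs to boxes; pairs below min_iou stay unmatched.
    Brute force over permutations, so only suitable for a handful of boxes.
    """
    gt_ids, pred_ids = list(gt), list(pred)
    swap = len(pred_ids) > len(gt_ids)
    small, large = (gt_ids, pred_ids) if swap else (pred_ids, gt_ids)
    best_pairs, best_score = [], -1.0
    for perm in permutations(large, len(small)):
        pairs = []
        for s, l in zip(small, perm):
            p, g = (l, s) if swap else (s, l)
            score = iou(pred[p], gt[g])
            if score >= min_iou:
                pairs.append((p, g, score))
        total = sum(score for _, _, score in pairs)
        if total > best_score:
            best_pairs, best_score = pairs, total
    return {p: g for p, g, _ in best_pairs}


# Ground-truth IDs 7 and 8; the tracker used its own IDs 101, 102, 103.
gt = {7: (0, 0, 10, 10), 8: (100, 100, 10, 10)}
pred = {101: (101, 100, 10, 10), 102: (1, 0, 10, 10), 103: (500, 500, 5, 5)}
print(match_ids(gt, pred))
```

Here tracker ID 102 pairs with ground-truth ID 7 and 101 with 8, while 103 overlaps nothing and stays unmatched.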

5. We observed some cases in which the ground truths and annotated bounding boxes are not accurate. What is the labeling standard?

Vehicles were NOT labeled when: (1) They did not travel across multiple cameras; (2) They overlapped with other vehicles and were removed by NMS (Only vehicles in the front are annotated); (3) They were too small in the FOV (bounding box area smaller than 1,000 pixels); (4) They were cropped at the edge of the frames with less than 2/3 of the vehicle body visible. Additionally, the bounding boxes were usually annotated larger than normal to ensure full coverage of each entire vehicle, so that attributes like vehicle color, type and pose can be reliably extracted to improve re-identification.

6. Can we access the previous version of the MTMC tracking dataset (CityFlow instead of CityFlowV2)?

The previous version of the CityFlow dataset has been included in the latest version, as the previous test set is the current validation set. The evaluation code is provided.

Track 2

1. What is UUID?

2. Can I use pre-trained natural language models?

Yes. Any NLP models that are not trained specifically for the CityFlow Benchmark are allowed for Track 2.

3. Where can I find the baseline model?

The repository is available on GitHub: https://github.com/fredfung007/cityflow-nl

The arXiv paper of the CityFlow-NL dataset and the baseline model is available here.

Track 3

1. Can we use dataset A2 for supervised or semi-supervised training on our algorithms?

As for the other tracks, in Track 3 the data set A2 (provided to teams without labels) should be used for validation only. Any sort of training on data set A2 (including manual labeling or semi-supervised labeling) is prohibited.

2. Why are the three camera views sometimes out of sync?

The videos are synced at the start. However, because some video concatenation took place during the video creation process, they may be slightly out of sync in the later part, but the offset should not be more than one second.

3. Does the activity id start at 0 or 1?

There was a discrepancy between the track description and the label file in the dataset. To confirm, the activity id starts at 0 and the info on the track description page has been updated accordingly.

Track 4

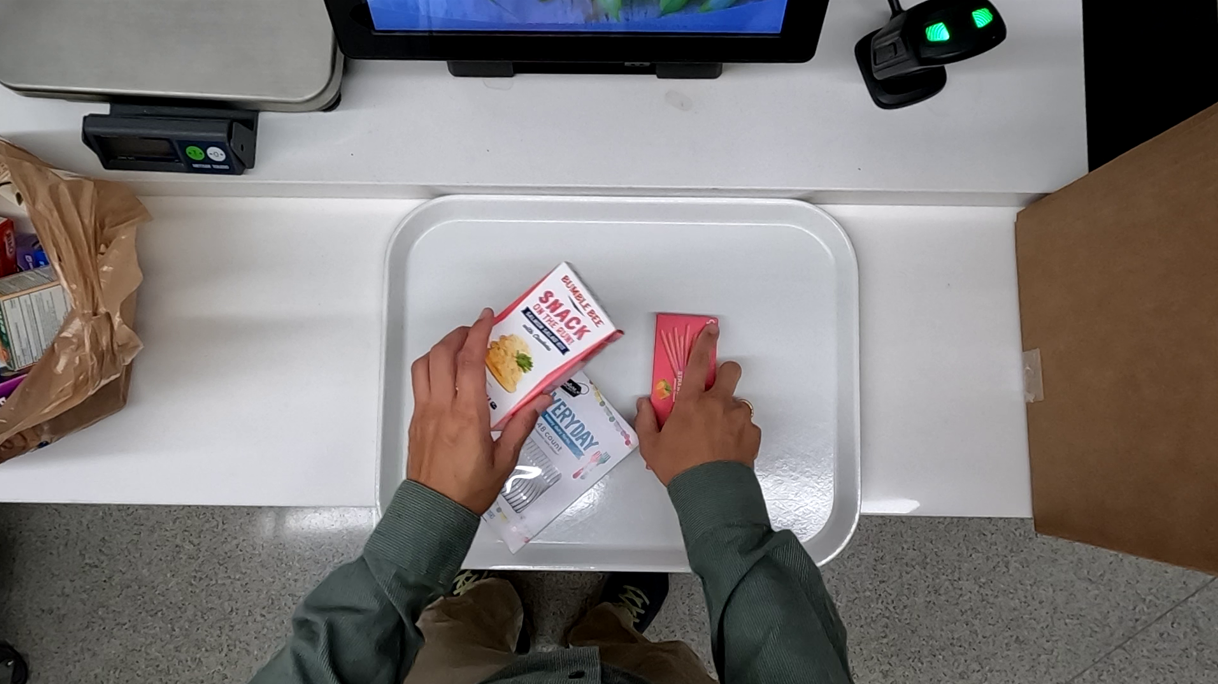

1. What is an automated checkout process look like?

The following figure illustrates a snapshot of our testing videos mimicking an automated checkout process.

2. Can the test set A be used for training models with methods such as pseudo-label, semi-supervision, or self-supervision?

The test set can’t be used. We neither restrict nor recommend how participants should detect the tray, but teams should keep in mind that the camera view might change slightly within a recorded test video.