2025 Challenge Track Description

Track 4: Road Object Detection in Fish-Eye Cameras

Fisheye lenses have gained popularity owing to their natural, wide, and omnidirectional coverage, which traditional cameras with narrow fields of view (FoV) cannot achieve. In traffic monitoring systems, fisheye cameras are advantageous because they reduce the number of cameras required to cover broad views of streets and intersections. Despite these benefits, fisheye cameras produce distorted views that require either a non-trivial design for image undistortion and unwarping or a dedicated design that handles distortion during processing. It is worth noting that, to the best of our knowledge, there is no open dataset available for fisheye road object detection in traffic surveillance applications. The datasets (FishEye8K and FishEye1Keval) comprise different traffic patterns and conditions, including urban highways, road intersections, various illumination conditions, and various viewing angles, covering the five road object classes at various scales.

- Data

We use the same train (the train set of FishEye8K), validation (the test set of FishEye8K), and test (FishEye1Keval) sets as in our previous challenge, Track 4 of the AI City Challenge 2024.

The FishEye8K dataset was published at CVPRW 2023. The training set has 5288 images and the validation set has 2712 images, with resolutions of 1080×1080 and 1280×1280. Together, the two sets contain 157K annotated bounding boxes of five road object classes (Bus, Bike, Car, Pedestrian, Truck). Dataset labels are available in three formats: XML (PASCAL VOC), JSON (COCO), and TXT (YOLO).
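YOLO TXT labels conventionally store one object per line as `class x_center y_center width height`, with coordinates normalized to [0, 1] by the image size. Assuming the FishEye8K TXT files follow that convention (check against the released files), the sketch below converts one label line into the absolute-pixel `[x1, y1, width, height]` layout used by COCO. The function name `yolo_line_to_xywh` is hypothetical.

```python
def yolo_line_to_xywh(line: str, img_w: int, img_h: int):
    """Convert one YOLO label line to (class_id, [x1, y1, width, height]) in pixels.

    Assumption: standard YOLO layout "class cx cy w h", with all coordinates
    normalized by the image width/height (e.g. 1080 or 1280 here).
    """
    parts = line.split()
    cls = int(parts[0])
    cx, cy, w, h = (float(v) for v in parts[1:5])
    box_w = w * img_w           # width in pixels
    box_h = h * img_h           # height in pixels
    x1 = cx * img_w - box_w / 2  # left edge in pixels
    y1 = cy * img_h - box_h / 2  # top edge in pixels
    return cls, [x1, y1, box_w, box_h]
```

For example, `yolo_line_to_xywh("2 0.5 0.5 0.1 0.2", 1280, 1280)` yields class 2 with a box of roughly `[576.0, 512.0, 128.0, 256.0]`.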

The test set, FishEye1Keval, contains 1000 images similar to those in the training set; however, they were extracted from 11 camera videos that were not used to build the FishEye8K dataset.

- Eco-Friendly & Real-Time Edge Solution

This year, we promote an eco-friendly, real-time edge computing solution: participants must submit a Docker container with a real-time framework optimized for Jetson devices (using ONNX or TensorRT). Owning a Jetson device is not mandatory for participation.

Teams that wish to compete for the track prizes and submit solutions to the public leaderboard must ensure that their framework/model runs at 10 FPS or more on a Jetson AGX Orin 32GB edge device. Although submitting FPS scores or other efficiency metrics is not required during the challenge, teams will need to submit a Docker container that will be used to evaluate the efficiency and effectiveness of their model on a Jetson AGX Orin 32GB. Submissions that achieve less than 10 FPS in that evaluation will be disqualified.

- Evaluation Metric

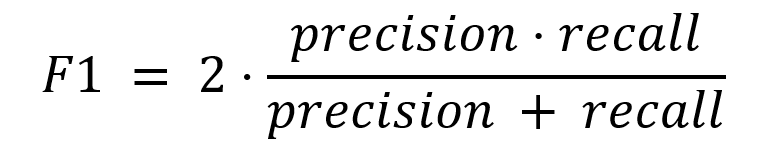

For the leaderboard, the F1-score, the same metric used in the previous year’s challenge, will be employed as equated below:

F1 is the harmonic mean of the overall precision and recall (not the cumulative values used to plot the PR curve). Evaluation scripts (eval_f1.py) for Windows and Linux are available here.
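As a minimal sketch of "overall" precision and recall: assuming the true positives (TP), false positives (FP), and false negatives (FN) have already been counted over the whole test set (the matching rule, such as the IoU threshold, is defined by eval_f1.py and is not reproduced here), F1 follows directly from those totals. The function name `f1_from_totals` is hypothetical.

```python
def f1_from_totals(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of overall precision and recall from dataset-wide counts."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0  # fraction of detections that are correct
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0     # fraction of ground-truth boxes found
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Because the counts are pooled over all images, this yields a single operating point rather than a curve; it is algebraically equal to 2TP / (2TP + FP + FN).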

After the challenge deadline, we will assess the Docker submissions and select the winning teams from among the top 5 to 10 leaderboard entries. Teams will be disqualified if their framework fails to meet the minimum 10 FPS requirement.

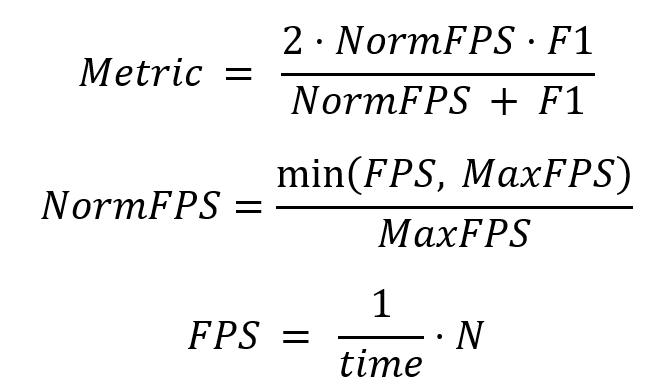

The primary metric will be the harmonic mean of the F1-score and FPS (frames per second, encompassing all framework processes), calculated as follows:

Where:

- MaxFPS = 25, which is sufficient for real-time applications.

- N = 1000 is the number of images in the test set.

- FPS is the average number of frames processed per second for all 1000 images in the FishEye1Keval dataset.

- time denotes the total elapsed wall-clock time for processing all 1000 images. Timing must begin immediately before the first image is processed and end after the last image is fully processed.
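The sketch below shows one way to measure FPS = N / time and combine it with F1. The normalization `min(FPS, MaxFPS) / MaxFPS` is an assumption inferred from the definitions above (the figure gives the official formula), and `process_image` is a hypothetical callable standing in for the full framework run on one image.

```python
import time
from typing import Callable, Sequence

MAX_FPS = 25.0  # MaxFPS as defined in the challenge description


def measure_fps(image_paths: Sequence[str], process_image: Callable[[str], object]) -> float:
    """Return FPS = N / time over the whole test set.

    `process_image` is hypothetical: it should run every framework step
    (image loading, pre-processing, inference, post-processing) on one image.
    """
    start = time.perf_counter()  # immediately before the first image is processed
    for path in image_paths:
        process_image(path)
    elapsed = time.perf_counter() - start  # after the last image is fully processed
    return len(image_paths) / elapsed


def final_score(f1: float, fps: float, max_fps: float = MAX_FPS) -> float:
    """Harmonic mean of F1 and normalized FPS.

    Assumption: FPS is normalized as min(fps, max_fps) / max_fps, so speeds
    above MaxFPS earn no extra credit.
    """
    norm_fps = min(fps, max_fps) / max_fps
    if f1 + norm_fps == 0:
        return 0.0
    return 2 * f1 * norm_fps / (f1 + norm_fps)
```

Under this assumption, a model with F1 = 0.6 running at 10 FPS (the minimum) scores 2 · 0.6 · 0.4 / 1.0 = 0.48, and any speed of 25 FPS or more counts as a normalized FPS of 1.0. A run meets the eligibility requirement when `measure_fps(...) >= 10`.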

- Submission Format

Teams should submit a JSON file containing a list of dictionaries, each describing one detected object with its image ID, category (class) ID, bounding box, and confidence score. The submission must follow this schema:

```
[
  {
    "image_id": <int>,
    "category_id": <int>,
    "bbox": [x1, y1, width, height],
    "score": <float>
  },
  // Add more detections as needed
]
```
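A minimal sketch of building the submission with Python's standard `json` module, assuming the detector returns corner boxes `(x1, y1, x2, y2)` that must be converted to `[x1, y1, width, height]`. The `Detection` type, the helper names, and the output path are hypothetical.

```python
import json
from typing import List, NamedTuple


class Detection(NamedTuple):
    """Hypothetical detector output: corner-format box plus class and confidence."""
    image_id: int
    category_id: int
    x1: float
    y1: float
    x2: float
    y2: float
    score: float


def to_submission(dets: List[Detection]) -> list:
    """Convert detections into the required list of dictionaries."""
    records = []
    for d in dets:
        records.append({
            # Explicit casts avoid non-serializable types such as NumPy scalars.
            "image_id": int(d.image_id),
            "category_id": int(d.category_id),
            # Corner coordinates -> [x1, y1, width, height].
            "bbox": [float(d.x1), float(d.y1), float(d.x2 - d.x1), float(d.y2 - d.y1)],
            "score": float(d.score),
        })
    return records


def write_submission(dets: List[Detection], path: str) -> None:
    """Write the submission list to a JSON file at `path`."""
    with open(path, "w") as f:
        json.dump(to_submission(dets), f)
```

For example, a box with corners (10, 20) and (50, 80) becomes `"bbox": [10.0, 20.0, 40.0, 60.0]`.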

How to create an image ID?

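```python
def get_image_Id(img_name):
    # Strip the extension, e.g. "camera1_A_12.png" -> "camera1_A_12"
    img_name = img_name.split('.png')[0]
    # Each scene code maps to its position in this list: M=0, A=1, E=2, N=3
    sceneList = ['M', 'A', 'E', 'N']
    # "camera1" -> 1
    cameraIndx = int(img_name.split('_')[0].split('camera')[1])
    # "A" -> 1
    sceneIndx = sceneList.index(img_name.split('_')[1])
    # "12" -> 12
    frameIndx = int(img_name.split('_')[2])
    # Concatenate the three indices as decimal strings: "1" + "1" + "12" -> 1112
    imageId = int(str(cameraIndx) + str(sceneIndx) + str(frameIndx))
    return imageId
```

The code above converts an image name to an image ID, which is useful when creating the submission file.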

For example:

- If the image name is “camera1_A_12.png”, then cameraIndx is 1, sceneIndx is 1, frameIndx is 12, and imageId is 1112.

- If the image name is “camera5_N_431.png”, then cameraIndx is 5, sceneIndx is 3, frameIndx is 431, and imageId is 53431.

Category IDs:

The category IDs are numbered as follows:

| Class name | Bus | Bike | Car | Pedestrian | Truck |
|---|---|---|---|---|---|
| Category ID | 0 | 1 | 2 | 3 | 4 |
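The table can be kept as a lookup dictionary when writing the submission. If a model was trained with a different class order, its predicted indices can be remapped through the class names; the names `CATEGORY_IDS`, `ID_TO_CLASS`, and `remap` below are hypothetical.

```python
# Category IDs from the table above.
CATEGORY_IDS = {"Bus": 0, "Bike": 1, "Car": 2, "Pedestrian": 3, "Truck": 4}

# Reverse lookup, e.g. for printing class names next to predicted IDs.
ID_TO_CLASS = {cid: name for name, cid in CATEGORY_IDS.items()}


def remap(model_class_names, pred_class_index):
    """Map a model's own class index to the challenge category ID via the class name."""
    return CATEGORY_IDS[model_class_names[pred_class_index]]
```

For a model whose classes are ordered `["Car", "Bus"]`, `remap(["Car", "Bus"], 1)` returns 0, the challenge ID for Bus.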

- Additional Datasets

Teams aiming for the public leaderboard and challenge awards must not use non-public datasets in any training, validation, or test sets. Winners and runners-up must submit their training and testing code for verification after the deadline, to confirm that no non-public data was used and that the tasks were performed by algorithms rather than by humans.

- Data Access

AIC25 Track4 data can be accessed here.