2021 FAQs

General

1. We would like to participate. What do we need to do?

Please fill out the participation intent form to list your institution, your team and the tracks you will participate in. You just need to follow the instructions and submit the form.

2. I am interested only in submitting a paper but not in the Challenge. Can I do that?

Yes. Please make sure to submit your paper by the submission deadline.

3. How large can a team be?

There are no restrictions on team size.

4. What are the rules for downloading the data set?

A participation agreement is available ahead of the data being shared. You need to accept that agreement and submit that response ahead of getting access to the data set.

5. Can I use any available data set to train models in this Challenge?

Teams that are willing to be listed in the public leader board and win the challenge awards are NOT allowed to use any external data for either training or validation. The winning teams and runners-up are required to submit their training and testing codes for verification after the challenge submission deadline. However, teams are allowed to used data across different challenge tracks, e.g., the synthetic data can be used for both Track 2 and Track 3. There is one exception: The data of Track 3 should NOT be applied to the task of Track 2.

6. What are the prizes?

This information is shared in the Awards section.

7. Will we need to submit our code?

Winning teams and runners-up are required to submit their training and testing code for verification after the challenge deadline, in order to ensure that no external data is used for training and the tasks were performed by algorithms and not humans.

8. How will the submissions be evaluated?

The submission formats for each track are detailed on the Data and Evaluation page.

The validation sets are allowed to be used in training.

10. Are we allowed to use test sets in training?

Additional manual annotations on our testing data are strictly prohibited. We also do not encourage the use of testing data in any way during training, with or without labels, because the task is supposed to be fairly evaluated in real life where we don’t have access to testing data at all. Although it is permitted to perform algorithms like clustering to automatically generate pseudo labels on the testing data, we will choose a winning method without using such techniques when multiple teams have similar performance (~1%). Finally, please keep in mind that, like all the previous editions of the AI City Challenge, all the winning methods and runners-up will be requested to submit their code for verification purposes. Their performance needs to be reproducible using the training/validation/synthetic data only.

11. Are we allowed to use data/pre-trained models from the previous edition(s) of the AI City Challenge?

Data from previous edition(s) of the AI City Challenge are considered external data. These data and pre-trained models should NOT be utilized on submissions to the public leaderboards. But it is allowed to re-train these models on the new training sets from the latest edition.

12. Are we allowed to use other external data/pre-trained models?

The use of any real external data is prohibited. There is NO restriction on synthetic data. Some pre-trained models trained on ImageNet / MSCOCO, such as classification models (pre-trained ResNet, DenseNet, etc.), detection models (pre-trained YOLO, Mask R-CNN, etc.), etc. that are not directly for the challenge tasks can be applied. Please confirm with us if you have any question about the data/models you are using.

Track 1

1. What is the definition of “cars” and “trucks”? Should we count pickup trucks and vans as “trucks”?

Pickup trucks and vans should be counted as “cars” and the “truck” class mostly refers to freight trucks.

To be more specific, the following type of vehicles should be counted as “car”: sedan car, SUV, van, bus, small trucks such as pickup truck, UPS mail trucks, etc.

And the following type of vehicles should be counted as “truck”: medium trucks such as moving trucks, garbage trucks. large trucks such as tractor trailer, 18-wheeler, etc.

2. I would like to clarify on the frame_id in the submission file. From the ground truth this frame_id seems to be the moment when the car/truck leaves the ROI but will there be a certain range of frames for a TP count of the vehicle?

The ROI is used in this track to remove the ambiguity that whether a certain vehicle should be counted or not especially near the start and end of a video segment. The rule is like this: any vehicle present in the ROI is eligible to be counted and a certain vehicle is counted at the moment of fully exiting the ROI. By following the rule, two people manually counting the same video should yield the same result. All ground truth files are manually created following this rule. During evaluation, a certain buffer will be applied to ensure that a couple frames of difference would not affect the final score. The detailed evaluation information will come up later.

3. Does efficiency_base.py cover the usage of multi-gpu and multi-cpu?

The script takes multi-cpu into account but not multi-gpu. The script is not full-proof but is the best we can do without the teams having access to the same execution system. However, the prize evaluation will be done on Dataset B and will be using the same system for all teams, which will remove the discrepancy. Results on Dataset A are only indicative to the users of the general execution trend of their method in comparison to other methods of other teams.

4. In the formula for calculating wRMSE, could you please illustrate what are i,j,k refer to?

k is the number of segments for that video. The weights applied to the RMSE are the index of the segment, normalized by the sum of the weights.

For example:

k = 10

w = (np.arange(1, k+1)*2)/(k**2+k)

w = array([0.01818182, 0.03636364, 0.05454545, 0.07272727, 0.09090909, 0.10909091, 0.12727273, 0.14545455, 0.16363636, 0.18181818])

5. We would like to confirm, whether each video in the Dataset B comes from the same 20 cameras as in the Dataset A? Can we adopt camera-specific detection/tracking strategies?

Yes, all videos in the Dataset B come from the exact same 20 cameras as in the DatasetA and follow the exact same naming conventions. The submitted program should be able to load the configs for each camera (ROIs, MOIs, etc) based on the video names thus it can be directly applied on Dataset B. If there are camera-specific detection/tracking strategies baked in the program it is totally OK and should work directly on Dataset B.

6. Since sometimes the vehicles are not visible when exiting the ROI due to occlusions, when should we count those vehicles? Should we count the cars that been occluded during the entrance of ROI but become visible from the middle area of the Intersection?

As explained in the question #2 under Track 1, during the manual labeling, we are trying the best to record each vehicle at the time of fully exiting the ROI by human judgement even when occlusion occurs. Teams should follow the same principle. Also as clarified in question #2 under Track 1, “any vehicle present in the ROI is eligible to be counted”, thus “a vehicle occluded during the entrance of the ROI but are visible in the ROI” needs to be counted.

7. What is <gen_time> exactly? How would it be used in the online evaluation with the requirement: “At any given time t, assuming the program execution start as t_0, any output for video frames outside the range [max(t_0, t-15s), t] will be ignored in the online evaluation.”?

Gen time is the number of seconds from the time you started the script processing the video until the result was output to the file. It is a float, rather than an int. You may Use, for example, datetime.now() to get a starting timestamp at the start of your script’s execution and subtract it from the datetime.now() timestamp at the time of writing the result, then output the result in seconds.

gen_time is relative to the execution of each video. If you process multiple videos in your script, get a video-specific timer for the start of processing (reading) each video. Note that model loading should be included in this time too. With that being said, the gen_time is supposed to be per video, as if each video would be executed independently. It should be reset when the second video starts loading or execute the script independently for each video.

In the online evaluation, the gen_time will be compared against frame_id to determine if the corresponding result should be ignored. Assuming 10 fps (note that different videos in the dataset have different fps values), if your gen_time for frame 1 is > 15.0, it will be ignored. Similarly, if your gen_time for frame 11 is greater than 16.0, it will be ignored. As long as each frame’s result is processed and output in less than 15 seconds from the time it would have been read (if streaming the video in real time), its output will count.

Track 2

1. Does the order of the k matches for each query matter in the submission file?

YES. To achieve higher accuracy score, your algorithm must be able to rank more true positives on top.

2. Why is there a rule that prevents using external data for training and testing?

To clarify, our goal is to ensure fairness across all levels by restricting the available resources. Moreover, real data are expensive to collect and annotate, and it is impossible to capture all scenarios in real world. Therefore, the data of Track 3 (MTMCT) are NOT allowed to be used for the task of Track 2 (ReID). Like other mainstream benchmarks of ReID, e.g., MARS, VeRi, etc, the participants should NOT have access to the original videos and spatio-temporal information. Using synthetic data is a potential approach to overcome the lack of real data. Teams are encouraged to leverage the provided synthetic data as much as possible when designing your methods.

3. Can we access the previous version of the ReID dataset (CityFlow-ReID instead of CityFlowV2-ReID)?

Yes. Please submit the AI City Challenge 2020 Datasets Request Form and indicate you need access to the Track 2 data. In the body of the email to aicitychallenges@gmail.com, please specifically mention that you request for the CityFlow-ReID version instead of CityFlowV2-ReID. For the evaluation on CityFlow-ReID, please send the results also to aicitychallenges@gmail.com. Each team will only have three opportunities to evaluate on the old test set.

Track 3

1. In some scenarios, some misalignment of synchronization is observed even after adding the time offset. Why does it happen?

Note that due to noise in video transmission, which is common in real deployed systems, some frames are skipped within some videos, so they are not perfectly aligned.

2. What is the format of the baseline segmentation results by Mask R-CNN? How can they be decoded.

Each line of the segmentation results corresponds to the output of detection in “train(test)///det/det_mask_rcnn.txt.”

To generate the segmentation results, we adopt the implementation of Mask R-CNN within Detectron: https://github.com/facebookresearch/Detectron

Each segmentation mask is the representation after processing vis_utils.convert_from_cls_format() within detectron/utils/vis.py. It can be visualized/displayed using other functions in the vis_utils.

3. How can we use the file ‘calibration.txt’? It is a matrix from GPS to 2D image pixel location and there are some tools about image-to-world projections in amilan-motchallenge-devkit/utils/camera, but how can we use the code correctly?

The tool we used for calibration is also publicly available here. We mainly rely on the OpenCV libraries for homography operations. For projection from GPS to 2D pixel location, you may simply apply matrix multiplication with our provided homography matrix. For the back projection from 2D pixel location to GPS, first calculate the inverse of the homography matrix (using invert() in OpenCV), and then apply matrix multiplication. The image-to-world projection methods in “amilan-motchallenge-devkit/

In the updated calibration results, the intrinsic parameter matrices and distortion coefficients are provided for fish-eye cameras. Note that though the GPS positions are represented as angular values instead of coordinates on a flat plane, since the longest distance between two cameras is very small (4 km) compared to the perimeter of the earth (40,075 km), they can still be safely viewed as a linear coordinate system.

4. Do we need to consider the condition that a car appears in multiple scenarios?

In Track 3, there is no need to consider vehicles appearing across scenarios, so that the provided camera geometry can be utilized for cross-camera tracking. But in Track 2, all the IDs across cameras are mixed in the training set and the test set, which is a different problem to solve.

5. How are the car IDs used for evaluation? In the training data there are ~200 IDs. But when working on the test set, the tracker may generate arbitrary car IDs. Do they need to be consistent with the ground truths? Is the evaluation based on the IOU of the tracks?

We use the same metrics as MOTChallenge for the evaluation. Please refer to the evaluation tool in the package for more details. The IDs in the submitted results do not need to match the exact IDs in the ground truths. We will use bipartite matching for their comparison, which will be based on IOU of bounding boxes.

6. We observed some cases that the ground truths and annotated bounding boxes are not accurate. What is the standard of labeling?

Vehicles were NOT labeled when: (1) They did not travel across multiple cameras; (2) They overlapped with other vehicles and were removed by NMS (Only vehicles in the front are annotated); (3) They were too small in the FOV (bounding box area smaller than 1,000 pixels); (4) They were cropped at the edge of the frames with less than 2/3 of the vehicle body visible. Additionally, the bounding boxes were usually annotated larger than normal to ensure full coverage of each entire vehicle, so that attributes like vehicle color, type and pose can be reliably extracted to improve re-identification.

7. Can we access the previous version of the MTMC tracking dataset (CityFlow instead of CityFlowV2)?

The previous version of the CityFlow dataset has been included in the latest version, as the previous test set is the current validation set. The evaluation code is provided.

Track 4

1. In the instructions of the submission format there is an ambiguity concerning the value . Does it refer to the time at which an anomaly starts (is detected) or the time at which the anomaly score is the highest? Moreover, what happens with the duration of an anomaly? Are we interested in this information during evaluation process? Is this incorporated in a way in the aforementioned timestamp value?

Teams should indicate only the starting point of the anomaly, e.g., when the first vehicle hit another vehicle or ran off the road. The duration of the anomaly does not need to be reported.

2. Concerning videos with multiple anomalies, if a second anomaly occurs while the first anomaly is still in progress should we identify it as a new anomaly?

No, only one anomaly should be reported. We have clarified the evaluation page to reflect this. In particular, if a second anomaly happens within 2 minutes of the first, it should be counted the same anomaly as the first (i.e., a multi-car pileup is treated as one accident).

3. Finally in the submission file, should we send only the anomalies detected or the top 100 scores, concerning the most possible abnormal events or could it be less/more?

You should not submit more than 100 predicted anomalies. The evaluation strategy is designed to penalize false positives. As such you should only submit N high confidence results, where N <= 100.

4. For the “timestamp”, what kind of format should we follow? In your evaluation page, you mention “ is the relative time, in seconds, from the start of the video (e.g., 12.3456)” But in you training data, you give “2 587 894”. Which one is correct? “587” vs “12.2456”?

The timestamp 587 refers to 587.0 seconds from the onset of the video, i.e. 9 minutes and 47 seconds into the video. If you look at video 2, you will see a car stopping on the side of the road around 9 minutes and 47 seconds into the video. As specified in the evaluation page, the submission file should contain the timestamp as float.

5. If our confidence scores are all binary value, like 0 or 1 of each frame, how do you handle this case?

The confidence score should be between 0 and 1. It is not currently used in the evaluation but may be used in the future. As such, it would be beneficial to include confidence scores if possible.

6. Are there any clear definition of anomaly detection? If there is a vehicle suddenly stop on the road, the starting time is count from the vehicle slowing down or stop completely. For the training data 11.mp4, I don’t know where is the anomaly.

There is no exact definition of anomaly but basically it refers to anything we don’t expect to happen normally. The most frequent anomalies shown in the training set are stalled vehicles and crashes.

For a stalled vehicle, the anomaly start time is the time when the vehicle comes to a complete stop. For a single vehicle crash or a multiple vehicle crash, the start time is the time instant when the first crash occurs.

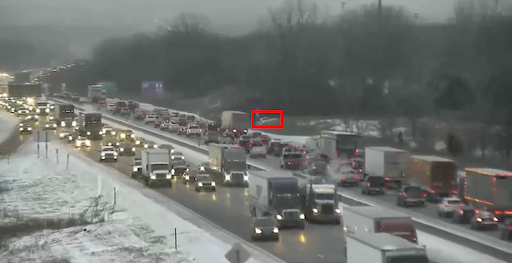

In training data 11.mp4, there is a stalled vehicle in the ditch. Please refer to the attached image.

7. Is it possible to provide the GPS locations of the cameras that took the videos in Track 3? It would help to get the calibration done.

The cameras that have been used to record the videos of Track 3 are PTZ type (Pan, Tilt, Zoom). Hence, the camera orientation and zoom can change dynamically. So, the GPS locations of the cameras are not provided.

1. What is UUID?

2. Can I use pre-trained natural language models?

Yes. Any NLP models that are not trained specifically for the CityFlow Benchmark are allowed for Track 5.

3. Where I can find the baseline model?

The repository is available on GitHub: https://github.com/fredfung007/cityflow-nl

The arXiv paper of the CityFlow-NL dataset and the baseline model is available here.